Est. Reading time:

This blog is a shorter intro to Raft, designed for people new to Distributed systems. The source Raft annotated paper : here

Consensus - Intro and history

Typically, fault-tolerant systems require multiple copies of the service running together. This in a technical term is called a Distributed system.

There are two modes these "Distributed systems" can work :

- Single source of truth, i.e. One database.

- A distributed source of truth.

In the first case, despite being a distributed system (from here on out, I am going to call it dist sys), there still exists a single point of failure and bottleneck. Yes, the database in itself may be a dist sys, but that would be considered in the second case a separate system.

It is this second case that truly gives us internet-scale services and the resilience of computer systems we have come to expect in this day and age. But accepting a single value across these multiple copies of the code, is surprisingly a very hard problem to solve. This is what consensus as a concept is.

To agree on a single value on multiple computers spread across a network, with all its faults and slow-downs is what consensus tries to do.

So far, the world has been dominated by Paxos as the de-facto standard for consensus algorithms. But it has its shortcomings :

- The designer himself doesn't have an implemented version of it

- Testing its correctness is very hard A lot of implementations exist, but each of them are different.

This paper introduces Raft, simpler consensus algorithms. One designed with implementation and understandablity in mind.

Raft does this by employing some novel ideas such as:

- Separating leader election from log replication and safety

Safety: A system ensures that at any given point a system cannot be left in a "bad"/"inconsistent" state.

- Reducing state-space

State space refers to the ways the servers can be inconsistent

Consensus algorithms for practical systems usually have the following properties :

- Ensure safety under all non-Byzantine conditions

non-Byzantine: scenarios where nodes are assumed to be reliable and will not act maliciously or deceptively

- Fully available as long as the majority of the nodes are operational.

- Do not depend on a global/consistent timing

Things get very interesting when we have consistent timings, we can simply attach a timestamp to each request and order the logs based on this time. Solves all our consistency problems. But the real world sadly, is full of slow-downs and inconsistent times.

- A minority of slow servers should not affect the response times for requests.

Raft - Introduction

Raft is similar to algorithms such as "Viewstamped Replication", but has its own features such as :

- Strong leader: Log writes flow only from the leader to the follower, this requires a leader to be present at all times

- Leader election: Takes leader election into its own hands, and uses randomized timers to do the same. This in a way promises the liveliness of the system

Liveliness: Something good eventually happens, the system can never be in a stuck state.

- Membership changes: The way raft is designed, allows for configuration changes of nodes (nodes are just fancy terms for computers/servers in the system) without any downtime.

This paper goes in the following order :

- Intro to Replicated state machines (Anyone interested in Automatons, NFA's and Finite machines should know this.)

- Problems with Paxos

- The algorithm itself (fun stuff!)

- Membership changes (we are skipping this)

- Log compaction (we are skipping this too, but this too is a fun part)

We will also follow a similar structure to this post.

Replicated state machines

State machines are simple machines, when given a start state and a bunch of inputs, the resulting state is always the same and known. This is often used in dist sys.

Systems like GFS, and HDFS use a separate Replicated State machine to manage leader election and store metadata of the system (AKA Zookeeper).

Replicated state machines are implemented using a replicated log. This is simply a log of all the inputs thus far. The problem just went from having a consistent state machine across all systems to having a consistent and replicated log.

Replicated log: A series of commands. A consistent replicated log has all the commands in the same

order.

Keeping this replicated log consistent is the job of the consensus algorithms. From there the replicated log can be applied to the state machine to get consistent states across the system.

Paxos

- Paxos is incredibly difficult to understand.

- Paxos allows log entries to grow individually and

meldsall of them together.- Raft doesn't do this. It restricts in ways the log can be appended, keeping it always consistent, but maybe out of date.

- Paxos follows a

peer-to-peersystem with a weak leader, in trade for better performance, this makes things hard for real-world systems.

Do we really need more reasons than the first one?

The Raft algorithm

In raft, new log entries, always flow from the leader to the followers. Reads can come from any of the followers. Given this leader approach, Raft decomposes the algorithm in the following :

- Leader election: A new leader must be elected when an existing leader fails.

Leader is always any one of the nodes in the system.

- Log replication

- Safety, raft promises the following safeties :

- At most only one leader can be elected

- Leaders never overwrite or delete an entry in its log

- log matching, In a given term and index, the values in all consistent nodes are promised to be the same.

- If a given entry is present in a leader in a given term, all leaders in the future will have this entry in their log.

- Only committed log entries are applied to the state machine.

Phew...that was an intense paragraph before this. Most of it probably did not make sense, and that is fine. Cus I haven't been introduced to the actors in this algorithm.

Actors and structure of Raft

- We have the [Actor]leader, Responsible for accepting the writes first and forwarding to the followers.

- [Actor] Followers:

- Can serve reads

- Accepts log append requests from the leader and tries to write it to its log

- Can become [Actor]candidates on leader failure

- Can vote for other candidates when there is an election request, but only one of them

- [State] Term

- This is one weird concept, but simply put. Every time a new Election request is raised, its considered a new term. This is one of the values that is present with every node in the system.

- Terms only go forward, old terms can show up in the system if a node wakes up after some network failures, but our algorithm rejects requests from such nodes.

- [State]Replicated log:

- We have already seen this, but in raft. The log is split into indexes, and each index stores the command and the term it was committed to.

- APIs, we have the following APIs in Raft :

- RequestVote

- AppendEntries

The starting state

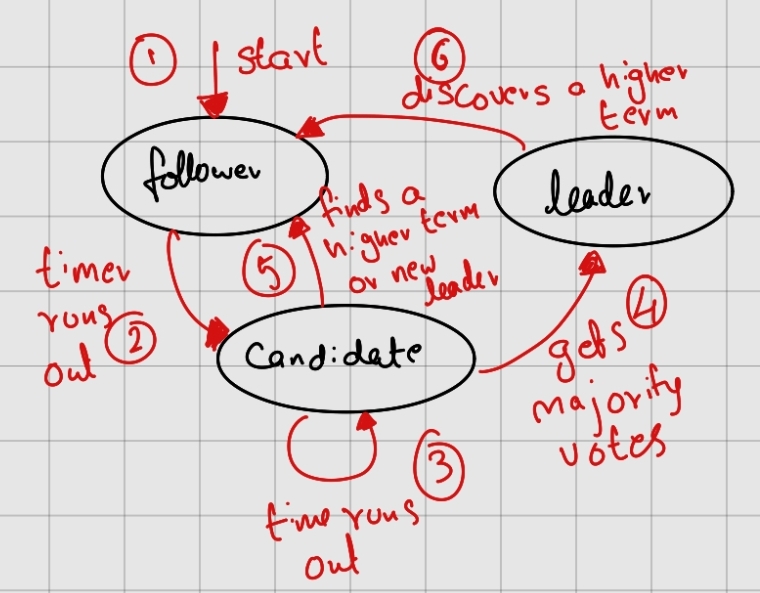

In a given system, all nodes start off as followers. Every node has a randomised timer. When this timer goes off, the follower becomes a candidate and sends a RequestVote call to every node in the system.

Every time a follower becomes a candidate, it increments its term by one. It sends this term in every RequestVote call. Response from the nodes can either be true (when it is voting in favour) or false (against). Along with this, every node sends back its state no matter the voting status.

Now comes a critical part of Raft : If a candidate or leader sees a term greater than its own, it reverts instantly to follower and updates its term. Every time a follower votes in favour of a candidate, it updates its term to the candidate's term.

Raft uses a heartbeat mechanism to trigger a leader election. On every heartbeat from the leader to the follower, the timer gets reset, when there aren't any heartbeats for a said time when the timer runs out, an election is triggered.

Election

When a follower's timer runs out, the following happens :

- Becomes candidate

- increments its term

- votes for itself

- sends out parallel requests to all the followers

When a candidate's timer runs out, it retries the sending RequestVote requests.

From here, there can be three cases :

- It wins the election

- Another server becomes the leader

- A set time goes by with no leader.

Each server can vote to at most a single server. And this combined with majority rule ensures not more than 1 leader can be elected. Votes are given on a first come first serve basis.

Case 1: When a candidate wins the election, it sends a heartbeat message to all the servers.

Every node in the system knows the number of nodes in the system. This is the only way that a candidate will be able to determine if it has a majority. Raft does support membership changes, but thats not something I cover in this post.

Case 2: While waiting for votes, if the candidate receives an AppendEntries request claiming to be a leader if the "claimed" leader's term is greater than or equal to the current candidate's term, it reverts to a follower state and updates its term and its log.

If the "claimed" leader's term is smaller than the candidates, it continues being a candidate.

Case 3: When none of the candidates receives a majority vote, the timer simply runs out and the election restarts. Without measures, this can go on indefinitely. Hence, one of the measures taken is to always have an odd number of nodes in the system.

So, when does a server vote for a candidates request :

Election restriction

The RequestVote request has details about the candidate's log, it contains the latest index and its term. A node will vote in favour of a candidate only if the index and the term of the last entry are greater than or equal to its own. If both the logs have the same term, then the longer log is the latest one.

In short, a vote in favour of the candidate is given only if its log is at least as up-to-date as that of the follower. Combine this with the majority vote, the leader must have the log of the more recently committed (as committed entries are present in a majority of the nodes) to get a majority. I call this a

two-way majorityrule!

Log replication

When a write request shows up, the leader, it parallelly sends AppendEntries request to all the nodes in the system. In case of a slow or failed node, this AppendEntries request is retried indefinitely (Even after the leader has responded back to the client).

The leader decides when it's safe to apply an entry to the state machines. This state is called committed. When the AppendEntries Request has successfully replicated the entries in the majority of the logs in the system, the entry is considered to be committed. The leader includes this committed status in the upcoming, AppendEntries request. When the follower learns that a given entry is committed, it goes ahead and applies it to the state machine.

This way of Log matching ensures two critical properties in the Raft log system :

- If two entries in different logs have the same index and term, they store the same command.

- If two entries in different logs have the same index and term, every entry preceding the said entry is the same.

The first one happens because the leader creates at most one entry with a given log index (Once again attributed to the fact that majority voting of consistent states is required to become a leader)

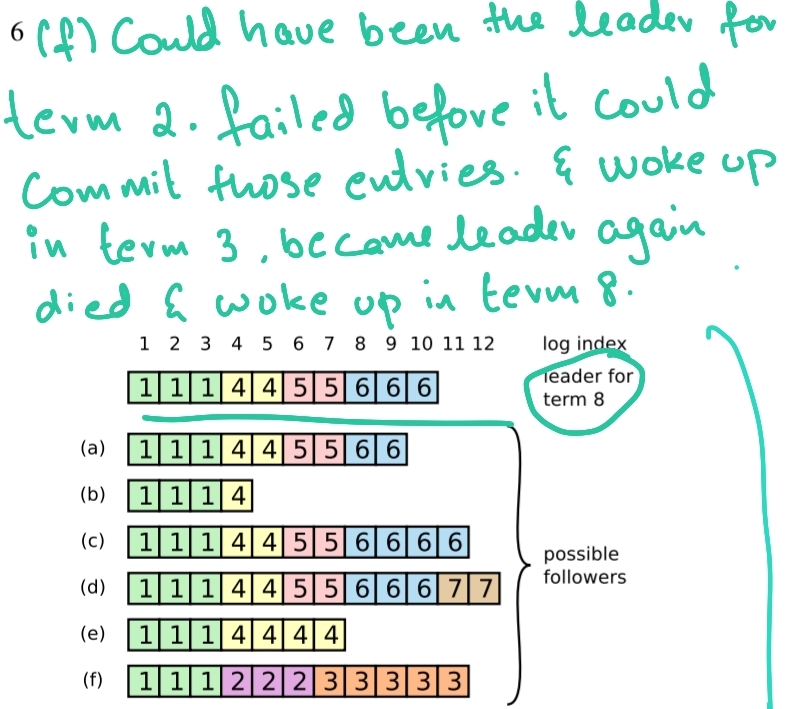

If the follower does not find an entry in its log with the given term and index, it refuses the AppendEntries request. As an example, let's take the following case:

In the above case, we see that some node is the leader in term 8. We also see that node "f" has some entries in terms 2 and 3. These don't seem to appear is any of the other nodes, leading us to believe that those entries were never successfully committed.

If they were, then the current leader couldn't have gotten its majority with its current log. So how does Raft handle this very old and outdated follower?

Conflicting entries like these are overwritten by the leader with entries from the leaders logs. This is considered safe, as we look for a majority while electing this leader with immense power and majority while committing.

These consistency checks are part of the AppendEntries Request, where the leader maintains a nextIndex for each follower. Which is the next log entry index the leader will try sending to the follower. (This reminds me of a pushback trial method). In case the said nextIndex is inconsistent, the nextIndex reduces by 1.

And as stated before, a leader can never overwrite of delete its own logs.

Now, is is possible that the entry was replicated to the majority of the logs, but the leader failed right before it could indicate to all followers that the entry is committed. The new leader will now have these majority replicated but un-committed entries in its log. But a leader will NEVER try and commit log entries by counting replicas from previous terms. It will do so only for the current term. But if an entry from the current term is committed, then all prior entries are also considered committed and applied to the state machine. This is the Log matching property coming to the rescue.

Simple proof that this thing works (Also works as a recap)

Raft promises that if a log entry is committed in a given term, then that entry will be present in leaders of all higher-numbered terms. This is called the Leader Completeness property. Let's assume this property is false, we need to prove a contradiction now :

Suppose a leader from term T leaderT commits a log entry in its term, and say this entry is not stored by leader for some future term U where the leader is leaderU.

- The committed entry must be absent in leaderU at time of its election

- leaderT replicated that entry across majority, and leaderU also receives votes from a majority. This means, in the easiets case, at lest one node accepted the entry from leaderT and voted for leaderU

- It must have accepted append requests from leaderT before voting for leaderU, or else that node would have rejected append requests from leaderT citing a higher term.

- the voted gave its vote to leaderU, which means leaderU's logs must have been up to date with this voter.

- If so, the voter and leaderU

- could have shared the same last long term. If so, the length of leaderU must be at least as long as that of voter. The leaderU must have contained every entry in voters log. This is a contradiction from our initial assumptions

- In second, leaderU's log must not have been longer than voters. In such a case, the voter would have not voted for leaderU all long. This also contradicts our initial case.

This way, we know that the Leader Completeness property is held true, eventually helping prove the correctness of the algorithm itself. Raft TLA+ Specs are written along the same lines, and a working model of this exists for testing.

Timing and availability

As a final note to this post, let's look into the randomized timer that Raft employs. I think it's an understood fact that without a steady leader, the system cannot make progress. With randomized timers being very small, there is a possibility that leaders are constantly changing and no progress is made. For this reason, there is a requirement for this timeout :

broadcastTime << electionTimeout << MTBF

MTBF - Mean time between failures

With that, I hope you learnt something about consensus and raft through this post. Adios.

Annotated raft paper : Raft annotated paper