Est. Reading time:

If you are one to jump into papers directly, here is my annotated version. And here is the original paper [University of Michigan 2001].

The duality of "the internet"

Internet is considered to be both "as fragile as a sleeping baby" and "as robust as a sleeping dad". Where did the internet get this reputation from?

It turns out that the internet is not robust. We are used to never seeing things fail (Thanks to the network engineers and protocols that make this happen). Things go wrong in the physical internet network way too often. Packets are missing, messages are being duplicated, packets have minds of their own, etc. All those 1's and 0's streaming to the supercomputer in your pocket un-dropped and not missing a beat? It's all thanks to smart protocols like TCP.

All it takes is one misconfigured router [Facebook's BGP problem] or one faulty algorithm to pull an entire service from the face of the earth. Internet-scale companies know the cost of outages, which is why they spend billions inventing these ways to make services reliable and robust, despite the faulty nature of the internet.

The world is way too interconnected and internet-dependent to accept outages and faults as a common thing.

Enter TCP, with its fancy flow control and delivery guarantees, most services use TCP as the de-facto standard unless their use case calls for something else. This piece I've written here is talking about one Flow Control algorithm named: SFB: Stochastic Fair Blue - A Queue Management algorithm for enforcing fairness.

TCP Flow control

Before we jump into the fancy fun stuff, we got to know what TCP flow control is and how it works.

Packets are dropped when the receiver is unable to process packets fast enough and has no more space to store your packets for processing later. Well, What way can one avoid this? Simply...do not send the packets :kek:.

Ok, I agree. That's a very ingenious solution. Butttt, how do we know when we should stop sending messages? This is the exact problem TCP flow control deals with and solves for us.

Packet Loss Is OK

Packet loss is crucial to the functioning of TCP Flow control. Measuring the number of dropped packets is how our protocol knows if it should request the service to slow down. If it's as simple as requesting a service (sender) to slow down, would this ask for a detailed research paper and a blog?

In the initial TCP SYN-ACK handshake the opening window size is provided. This window size is represented using a bitfield. We as a sender should respect and send only how much it says is ok. But if this window size is too small, we will not be able to saturate the sweet sweet bandwidth we paid a bomb to get. This "receive window" (rwnd) is the maximum number of unacked messages we can allow to be in flight at any given time. This rwnd is given to us in every "ack" message.

To quote a website [1]:

If either the sender or receiver is frequently forced to stop and wait for ACKs for previous packets, then this would create gaps in the data flow, which would consequently limit the maximum throughput of the connection. To address this problem, the window sizes should be made just big enough, such that either side can continue sending data until an ACK arrives back from the client for an earlier packet.

I hear you, "That's enough about TCP flow control, start talking about SFB!!!". Just hang with me for a couple more paragraphs.

A lot of devices use a single buffer across connections, meaning your packets and mine stand in the same queue to be serviced. So if you, as a sender pay no heed to the rwnd, you can saturate your bandwidth and deny me of my worldly internet pleasure.

The internet is a very fair place, we treat everyone the same...everyone gets the same content (Don't quote me on region blocks...sigh!). Hence, it is of utmost importance to protect routers/servers/switches from users like you who will pay no attention to the rwnd signals.

This is where we have algorithms like RED and BLUE (Yes, Pokemon red and blue xD), as cool as they sound they had flaws. These two algorithms maintain a probability p, which is increased as the buffer gets full and reduces when the buffer is empty. As packets enter the buffer they are dropped with a probability of p.

But the issue with BLUE and RED were [2]:

The main flaw of Blue, which it shares with most single-queue queuing disciplines, is that it does not distinguish between traffic flows, but treats all flows as a single aggregate. Therefore, a single aggressive flow can push packets out of the queue belonging to other, better behaved, flows.

A trivial solution would be to start maintaining a state for each flow and flow and increase/decrease their probability of being dropped based on how rash they are, this would call for a very large state safe...maybe even bigger than the buffer itself just to store metadata about flows. NOW, we are ready to talk about SFB.

SFB: The fair algorithm

Instead of maintaining state for each flow and using hashmap to maintain them, we use bloom filters3. They are a probabilistic data structure. In dumb engineer terms, they can with certainty say that they haven't seen something before...but say with a maybe that they have seen something before.

Bloom filters, in their simplest form contain a single level of bins (that store 1 or 0) with a single hash function. If your input hashes to some bin with a pre-existing 1, we can conclude that we may or may have not seen this input before. If it hashes to a bin with 0, we can 100% definitively tell we haven't seen this before.

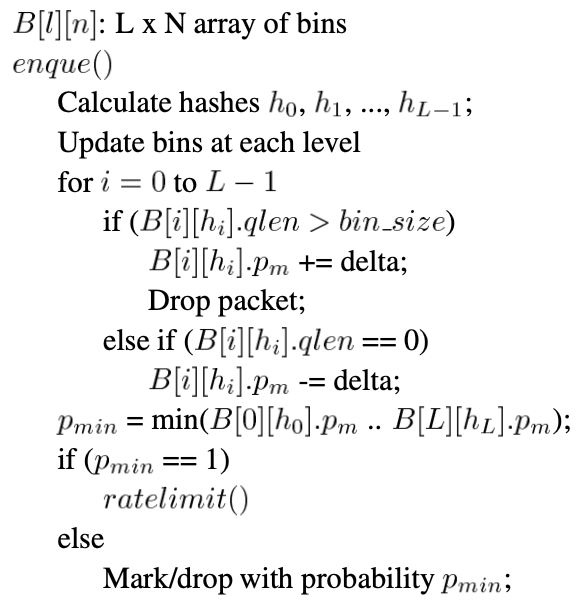

But a single level with a single hash function can lead to a lot of maybes and equally so false positives. SFB uses multiple layers of bins, each with its hash function. This reduces false positives by a lot with not much increase in memory footprint. SFB in each bin also stores a "p" value. The probability is increased when the buffer is full. And reduced when empty.

Each packet highlights one bin in each level. We take the minimum of all the "p" values from all the bins, unless a "fair" flow hashes to those same bins as the "rash" flow, at least one bin should have a low "p" and hence that takes effect!

The algorithm looks something like this [3]:

So this means that if a flow is sending packets even when the buffer is full, we can target it and reduce its bandwidth allocation! Fairness is restored and justice is served. The egalitarian way.

The paper also draws comparisons with other single queue algorithms. I will not be going into those here (honestly, it's a blog...what did you expect). But we still have a problem with misclassified flows permanently being punished just for being hashes similar to a rash flow and those rash flows that learnt their lesson and became good bois.

SFB puts forth some solutions for this too!

They propose a moving hash function. Where the hash functions that are hashing to the bloom filters are changed every 2 seconds and the bins are reset. Now this will let the rash flows capture more than they deserve. For this, they propose a 2-bin process. To quote the paper[4]:

One way to solve this problem is to use two sets of bins. As one set of bins is being used for queue management, a second set of bins using the next set of hash functions can be warmed up. In this case, any time a flow is classified as non-responsive, it is hashed using the second set of hash functions and the marking probabilities of the corresponding bins in the warmup set are updated. When the hashes are switched, the bins which have been warmed up are then used. Thus, non-responsive flows are rate-limited right from the beginning.

Conclusion

TCP as an algorithm is super cool, and its flow control is even more so. But it is really hard to keep the internet a free and fair place. Algorithms like BLUE, RED and SFB are critical to our modern-day internet-fueled world.

But at the same time, TCP and its reliability come at a cost of higher latency and lower throughput. It's not always needed. Hence, modern-day streaming services use UDP with some ECC (The user might not even observe a couple of dropped frames!). Which is something that interests me and I might look into it someday. But that's wrapping up this post :). Adios

References/Citations:

- [1] https://hpbn.co/building-blocks-of-tcp/#bandwidth-delay-product

- [2] BLUE Queue Management

- [3] https://en.wikipedia.org/wiki/Bloom_filter

- [4] https://rtcl.eecs.umich.edu/rtclweb/assets/publications/2001/feng2001fair.pdf