Part 3 - Implementation

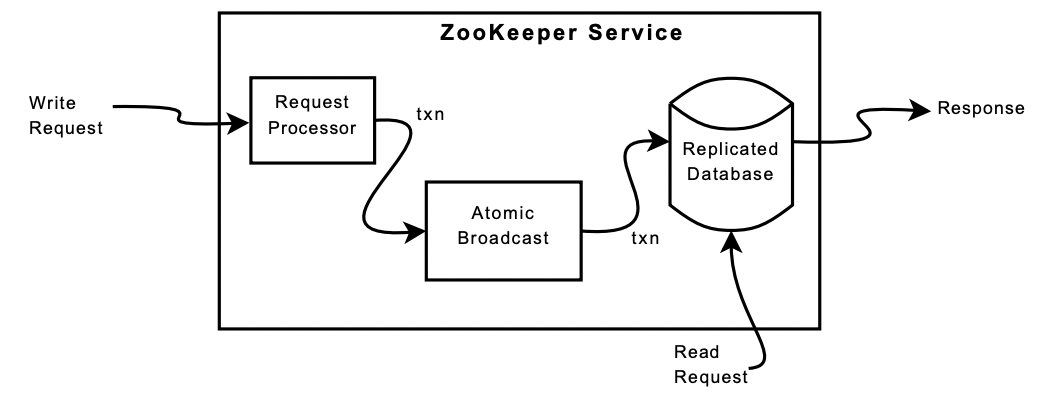

In this final part, we'll see how ZooKeeper is implemented internally. The scope of this post is rather shallow: we only look at its components and their interactions. We do not try to understand how it satisfies its guarantees.

Generic Architecture

ZooKeeper has the following architecture:

ZK replicates all the znodes across its ensemble (in an in-memory DB). Read requests are served locally. If a given operation requires co-ordination, it goes through the Agreement Protocol.

The Agreement Protocol is an atomic broadcast protocol. It has a leader, and all operations that require "co-ordination" are sent to that leader. The leader then broadcasts the operation to all the followers. Once a majority quorum of followers has acknowledged the operation, the leader can apply it to its local state.
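The quorum rule can be sketched in a few lines. This is a toy model, not ZooKeeper's code: `Leader`, `Follower`, `propose` and `broadcast` are hypothetical names, and it assumes the leader counts its own vote toward the majority of the whole ensemble.

```python
from dataclasses import dataclass, field


@dataclass
class Follower:
    """Hypothetical follower: logs a proposal and acks it if reachable."""
    reachable: bool = True
    log: list = field(default_factory=list)

    def propose(self, op) -> bool:
        if not self.reachable:
            return False  # a crashed or partitioned follower sends no ack
        self.log.append(op)
        return True


@dataclass
class Leader:
    """Hypothetical leader that applies an op only after a majority acks."""
    followers: list
    state: list = field(default_factory=list)

    def broadcast(self, op) -> bool:
        ensemble_size = len(self.followers) + 1  # followers plus the leader
        quorum = ensemble_size // 2 + 1          # strict majority
        acks = 1                                 # assumption: leader acks itself
        acks += sum(f.propose(op) for f in self.followers)
        if acks >= quorum:
            self.state.append(op)  # safe to apply to local state
            return True
        return False
```

With three servers, one unreachable follower still leaves a majority, while two unreachable followers block the operation.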

How this atomic broadcast protocol is implemented, and how it selects the leader and followers, is beyond the scope of this post. I suspect it is something similar to Raft.

Request Processor

Requests sent to ZK by clients are not idempotent. The Request Processor turns them into transactions that are idempotent, which makes them easier to process with the atomic broadcast protocol.

It computes the current state and the state after the given operation, and records the resulting state as part of the transaction.

As an example:

if a client does a conditional setData and the version number in the request matches the future version number of the znode being updated, the service generates a setDataTXN that contains the new data, the new version number, and updated time stamps. If an error occurs, such as mismatched version numbers or the znode to be updated does not exist, an errorTXN is generated instead.
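The conversion from a conditional request to an idempotent transaction might look roughly like this. It is a simplified sketch: `Znode`, `prepare_set_data` and `apply_txn` are made-up names, time stamps are left out, and it assumes there are no other pending transactions, so the "future" version is just the current version.

```python
from dataclasses import dataclass


@dataclass
class Znode:
    data: bytes
    version: int


def prepare_set_data(tree: dict, path: str, new_data: bytes, expected_version: int):
    """Turn a conditional setData request into a transaction tuple."""
    node = tree.get(path)
    if node is None:
        return ("errorTXN", path, "no such znode")
    if expected_version != node.version:
        return ("errorTXN", path, "version mismatch")
    # Record the resulting state (data and absolute version), not the
    # operation "increment the version". This is what makes it idempotent.
    return ("setDataTXN", path, new_data, node.version + 1)


def apply_txn(tree: dict, txn: tuple) -> None:
    """Apply a transaction; applying the same one twice changes nothing."""
    if txn[0] == "setDataTXN":
        _, path, data, version = txn
        tree[path] = Znode(data, version)
    # An errorTXN leaves the tree untouched.
```

Because the transaction carries the final version number rather than an increment, a duplicate delivery simply overwrites the znode with the same values.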

ZooKeeper Atomic Broadcast Protocol

ZooKeeper's atomic broadcast protocol is called ZAB. ZAB works through a majority quorum. It guarantees that changes broadcast by the leader are delivered in order and exactly once in normal operation. In some recovery situations, it might deliver a txn more than once. But since txns are idempotent, this does not matter.

ZAB makes use of TCP to make its job a little bit easier.

ZAB uses a rather unique way to ensure that the leader is live:

- If there are pending transactions that commit, then the server does not suspect the leader.

- If the pending queue is empty, the leader needs to issue a null transaction to commit and orders the "co-ordination" op after that transaction. This has the nice property that when the leader is under load, no extra broadcast traffic is generated.
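The two cases above can be modelled as a tiny function. This only mirrors the rule as worded in this post, not ZAB's actual implementation; `NULL_TXN` and `order_coordinated_op` are hypothetical names.

```python
from collections import deque

NULL_TXN = "nullTXN"  # placeholder for the leader's empty transaction


def order_coordinated_op(pending: deque, op: str) -> list:
    """Return what the leader queues in order to handle `op`."""
    queued = []
    if not pending:
        # Idle leader: a null transaction has to commit first, which
        # shows that a quorum still follows this leader.
        queued.append(NULL_TXN)
    # Busy leader: pending transactions committing already show liveness,
    # so no extra broadcast traffic is added.
    queued.append(op)
    pending.extend(queued)
    return queued
```

The null transaction is only paid for when the leader is idle, which is exactly when the extra broadcast is cheapest.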

Client - Server

Each request has a zxid, generated by the server (probably a monotonically incrementing number). This zxid is used to ensure that the client and server are in sync. When a client connects to a new server in the ensemble, the server checks that it has seen at least as much as the client, up to the client's last zxid. If not, the server does not establish the connection until it has caught up.
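A minimal sketch of that reconnect check, assuming zxids are plain integers that only grow. `Server`, `can_accept` and `catch_up` are illustrative names, not ZooKeeper APIs.

```python
from dataclasses import dataclass


@dataclass
class Server:
    last_zxid: int  # highest zxid this server has applied

    def can_accept(self, client_last_zxid: int) -> bool:
        """Accept the client only if this server is not behind it."""
        return self.last_zxid >= client_last_zxid

    def catch_up(self, zxid: int) -> None:
        """Pretend to replay transactions up to `zxid`."""
        self.last_zxid = max(self.last_zxid, zxid)
```

A server at zxid 10 would refuse a client that has already seen zxid 12, and accept it once it has caught up to 12. This keeps a client from ever observing older state after switching servers.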

fin.