Est. Reading time:

This blog was written for a presentation on Basic of Consensus

*lity of backend systems

In the age of internet scale service, we inherently expect services to be always present, 100% accurate and instantaneous. Or in verbs :

- Reliability : Being always correct

- Availability : Being always present

- Scalability : Being fast for any number of people

- Latency : Being instantaneous

For a successful system, reality must take precedence over public relations. - Richard Feynman

And nature does everything in its power to make sure the above properties don't hold true.

Hardware fails, People live far from your servers, Load is unpredictable, Network is unreliable.

How to build for internet scale then?

Requirements :

- Should be able to be un-fazed by hardware and network failures

- Should be low latency no matter where your users are

- Should scale

- Should always be available

So, maybe...Throw costly hardware at the problem?

Hardware cost don't scale linearly with the spec of hardware. And this still doesn't solve the problem. - Hardware can still fail - This costly hardware and be far from your user

Multiple computers but one storage...Shared DB architecture?

A good start! But there still only exists one copy of the data. If that fails, your system is down. Sounds enticing, as its easy to build such systems, helps with scale and latency. But we are one data stores failure away from being unavailable.

So maybe make multiple copies of the data on different systems?

Ah! You just described a distributed system. - This would be the perfect solution, but this isn't as simple as it seems. Even here, we can have two, but both have a distributed system in them :

The enemies of distributed systems

Turns out, enemies of distributed systems are a subset of internet scale systems. We just moved the problem to a different layer.

- We need data to be consistent across all the copies on different systems - Slow network doesn't help

- The systems holding the copies can fail, again...sigh - We need to be available even on failures

Funnily enough, given more replicas in the system, higher probability of failure of a given system.

Most of problem with replication lies in the changes to data. We need to make sure the system as a whole is consistent and these replicas agree with each other when a value is read. - This is Consensus.

Problems with Consensus - The potato and Ferrari cart

Asynchronous vs synchronous

Let's assume an online storePotatoZon uses a distributed system to handle traffic.

- A user

aadds a potato to the cart (Lets say this request went to ServerQ). - And also goes on to add a Ferrari to the cart (This also goes to server

Q). - He opens his cart and sees both the items in it (Let's assume this goes to server

Z).

Luckily, both

QandZreplicas were up-to date and consistent.

- He looks at the price and finds it to be too much and decides to remove the Ferrari from the cart. (This goes to server

Z).

Say, right after the request to remove the Ferrari, server

Zcrashes but not before replicating to serverX. As PotatoZon uses asynchronous replication, even though the changes were not seen by serverQthe user got confirmation for his Ferrari removal.

- He decides to check out and buy the potato. (This request goes to server

Q).

Oh no...he sees that both Ferrari and the potato are ordered. A costly mistake.

Remember, server Q did not see the change of removal of the Ferrari from cart, but handled the checkout.

Asynchronous replication : application developers can build on “weakly” consistent storage models that do not use coordination; in this case developers must reason about consistency at the application level

You might say: Navin, let's just use synchronous replication and wait for all replicas to acknowledge the writes. In which case, the checkout would have not even happened as server Q crashed.

(I'm sorry for the tiny size, atleast it's a SVG, so feel free to zoom)

Strong consistency can be enforced in a general-purpose way at the storage or memory layer via classical distributed coordination (consensus, transactions, etc.),

but this is often unattractive for latency and availability reasons.

It's a shame that we can't even have be resiliant to one failure if we have to correct

Split brain issue

Now if your seasoned with distributed systems, you might propose this solution :

- Lets keep a heartbeat to keep track of what all replicas are alive

- And except a response only from those that are alive

- When the dead recovers, we can make sure its upto date and then start expecting responses

This way, it seems like we maintain consistency and availability. But this is not the case. Right?

Let's again take the same example of PotatoZon. Three server Q, Z and X. And eventually server Z seems like dead to Q and X.

But what if the network between the nodes were partitioned? So Z is still alive and can accept requests, but Q and X can't reach it and vice versa. So in this case :

Zwould assumeQandXare dead- accepts read and write requests from the users

- And now we end up with two different versions of the data.

- And when the network does restore, we have no clue which was the correct version of the data.

Yikes! So messy. This is called Split brain issue.

GitHub actually had a split brain issue in 2018, leading to downtime and messy data.

Really interesting stuff : https://www.youtube.com/watch?v=dsHyUgGMht0&ab_channel=KevinFang

So how is this problem solved in real life? Introducing Consensus algorithms. There are many Consensus algorithms with different gaurentees and properties. But two widely used ones are Paxos and Raft. We'll look at what Raft does in a very high level.

Raft - The consensus algorithm

We do not have the time to see Raft in detail, but if interested : here

Raft operate with a leader-follower model. But a weak leader to be able to handle leader failures.

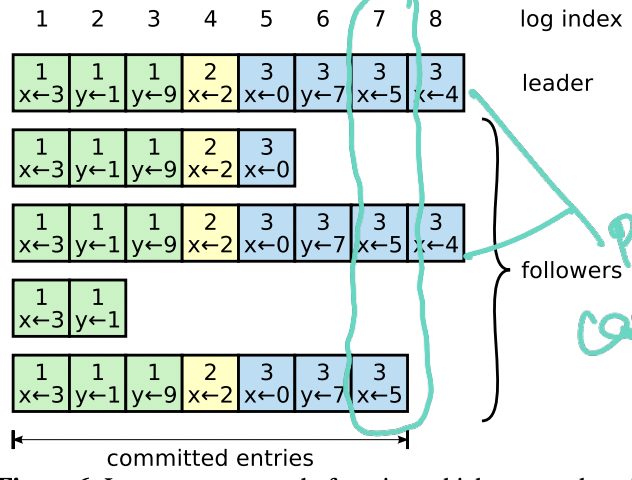

Raft operates on a state machine model, where each replica holds the log of writes, like this :

The number on top of each entry is a term number. Every write will be associated with a term number.

There exists heart beats between followers and leader, and each follower has a randomized timeout. If it doesn't receive a heartbeat in that time, it will become a candidate to try and become a leader.

Algorithm :

-

A follower becomes a candidate when its timer run out

-

Each follower's random timer is reset when it receives heart beat from the leader

-

A leader is selected using a voting system

- To be elected, a candidate must have majority of votes

- When a candidate asks for a vote, it sends its latest term number and index of last log entry.

- A follower will vote for a candidate if :

- It has not voted for any other candidate of a higher or same term

- Data in leader is (term number and log index) upto date with the given follower.

- Once a candidate is elected, it becomes the leader and announces it to all followers

So this seem like the leader must have all the membership data to know what the majority is. Raft has some algorithm to hand this as well. We will not go into this.

-

Writes only go to the leader

-

A write is considered committed when it is replicated to a majority of the followers

-

If the leader observes a given follower is behind, it sends its data for the follower to catch up. (This is actually safe as the leader could have become one, ONLY if it had all the committed writes)

-

Reads can go to any follower.

This plays well into our issues, where majority writes make sure that even if some nodes are down, we can still go through writes.

Reads can come from any replica, so we can have low latency reads.

Correctness - really quickly

Raft has a two-way majority :

-

A given write cannot be committed unless its replicated to a majority of the nodes

-

A leader cannot be elected unless it has a majority of votes

This means a given leader can become a leader ONLY if it has the latest committed write. Making sure we are always consistent!

Some really shrewd eyed amongst you might say,

What if there were 6 nodes, and one candidate got vote from 2 of them and another candidate from another two. Where the connection between these three have been cut off.

Well, there exists a simple solution to this. Make sure that number of nodes in your cluster is always odd.

Really, the solution to that is that simple xD

And if more than majority of the nodes are down, the system becomes read-only and none of the writes pass through. Which is an acceptable trade-off in worst case situations.

Conclusion

Consensus is a fundamental building block of distributed systems. It allows us to build reliable, available, and scalable systems that can handle failures gracefully. In this presentation, we saw majorly the issues that consensus solves for us and on a very high level how Raft works.

Adios.